Many people are surprised when I tell them my Android phone lasts less than 8 hours under heavy use. I've also lost track of how many times I've asked someone how long their smartphone battery lasts, only to find they don't really know. The most common response goes something like this: “…not sure, because I plug it in whenever I get a chance.” Some of my friends even carry separate rechargeable backup battery packs to augment their limited battery life. As soon as their battery gets down to around a quarter tank, they plug in the mega backup.

As a developer who builds apps for smartphones, I've spent quite a bit of time getting familiar with many of the configurable aspects of my various phones, all while using them intensively in build/debug cycles. I've also put some thought into categorizing the different types of battery power usage, some of which are less obvious than others. So here goes:

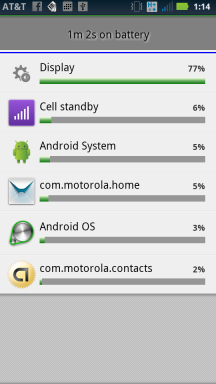

- Screen brightness. I turn it all the way down, but this makes reading the screen outside in bright daylight nearly impossible. The fact is, the brighter the screen, the greater the pull on the battery. On all my phones, the screen is the biggest gas hog.

- Number of applications loaded into memory. Very few of us pay attention to how many applications are loaded and running versus completely shut down. The more running apps the phone has to manage, the more battery is drawn.

- Cellular signal strength. If your phone has a weak signal, it will boost its own radio to try to compensate. Cell phone signals are not constant; they ebb and flow all the time. The downside is that when signals are weak, the phone expends additional power to try to keep you connected. Sure, use wireless (wifi) where possible, but when wifi isn't available you have to rely on good old cellular. It's commonly understood that 4G draws the most power, 3G draws less, and wifi draws the least.

- Duty cycle for using apps. By this I mean how much of the time between charges you spend using apps. If you spend 2 solid hours of app time during an 8-hour charge cycle, that is a duty cycle of 25% (2 divided by 8). Taking an idling phone with no apps installed as the baseline for minimal power usage, the lower the duty cycle, the less power is drawn.

- Number of applications that continuously connect to the internet. This includes Twitter, Facebook, Foursquare, email, etc. These apps can be very talkative in the background without you even knowing it. Every time they ask the mother ship for an update, they draw power to make the request over the internet and then process the results.

- Talk time. Everyone knows that talk time uses battery power, so this one is obvious compared to the five items above. On a smartphone you are usually doing other things in addition to talking. In the days of feature phones, such as the original Motorola Razr, the only thing you could do was talk, talk, talk, intermixed with some limited texting. At least for me, over an 8-hour period I spend much more time (a higher duty cycle) using apps such as email than making phone calls.
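To make the duty-cycle arithmetic concrete, here is a minimal sketch in Python. It computes the duty cycle from the 2-hours-in-8 example and then a simple time-weighted average power draw: active power while using apps, idle power the rest of the time. The function names `duty_cycle` and `average_power` are hypothetical, and the milliwatt figures are placeholder assumptions for illustration only, not measurements from any phone.

```python
def duty_cycle(active_hours: float, cycle_hours: float) -> float:
    """Fraction of a charge cycle spent actively using apps."""
    if cycle_hours <= 0:
        raise ValueError("cycle_hours must be positive")
    if not 0 <= active_hours <= cycle_hours:
        raise ValueError("active_hours must be between 0 and cycle_hours")
    return active_hours / cycle_hours


def average_power(duty: float, active_mw: float, idle_mw: float) -> float:
    """Time-weighted average draw: active power for `duty` of the time, idle power otherwise."""
    return duty * active_mw + (1 - duty) * idle_mw


# Example from the text: 2 hours of app use in an 8-hour charge cycle.
d = duty_cycle(2, 8)
print(f"duty cycle: {d:.0%}")  # duty cycle: 25%

# HYPOTHETICAL power figures, chosen only to illustrate the model.
ACTIVE_MW = 1000.0
IDLE_MW = 100.0
print(average_power(d, ACTIVE_MW, IDLE_MW))  # 325.0
```

As long as active use draws more than idling, lowering the duty cycle lowers the average draw, which is the point the duty-cycle item makes.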

Hopefully this takes some of the mystery out of short battery life. We all wish batteries could last for days, but we unconsciously create situations within our phones that draw down the battery much faster than expected. And there are situations beyond our control, such as low cell signal strength, that draw extra power.